I’ve watched the birth and toddlerhood of all of the major configuration management tools (Puppet, Cfengine, Chef, Bcfg2, and so on) and have had the pleasure of knowing and interacting with almost all of their parents over the years. Recently I decided it was high time I get my hands dirty by leading a substantial deployment of one of the tools at $WORK. The first tool I thought I would tackle would be Puppet.

In the process of planning for this effort, I’ve identified what appears to be a fairly large usability/workflow gap in Puppet[1]. I’ve tried to talk to a whole bunch of people about how they do things, but as far as I can tell everyone is still making do with fairly rickety rope bridges to get over the gap. It is entirely possible I’ve overlooked something obvious or the problem isn’t as big as the amount of scrutiny I’ve given it. But something in my long-time sysadmin heart tells me we could be doing much better. I’d like to see if I can pose a clear and cogent problem statement here and see if others can help me figure out what I’m missing.

What’s the Problem?

One of the most common scenarios for how Puppet is used seems (to me) to have a “best practices” workflow that is unclear at best and unwieldy at worst. Given how often a sysadmin performs this workflow, I’d really like to know if there is a better way. If there isn’t, I’d like to work with people to invent one. Please read on for the gory details…

The Setup

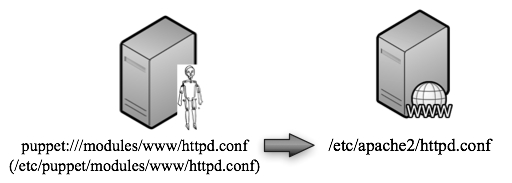

Let’s see the players in a super simple setup that I think provides a good demonstration of my quandary. Let’s say we have a web server host of some sort[2] called www configured through an httpd.conf file:

We’ll want this configuration file to be managed by Puppet, so let’s bring in a central Puppet server called puppetmaster. This server will store a copy of httpd.conf in its local datastore to be served out to Puppet clients:

We’ll assume that there is a Puppet client running on www. It is configured in the default manner to wake up every N minutes[3] and ask the server for changes to its config, pull them down, and implement them. If a change is made locally on www, but the change is not propagated to the Puppet-managed config, it will be overwritten.
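To make the "it will be overwritten" part concrete, here is a minimal Python sketch of what one client run does to a single managed file. It is only a toy model, not Puppet's actual implementation: the function name `enforce_file` and the `backup_dir` parameter are assumptions made up for illustration.

```python
import shutil
import tempfile
from pathlib import Path


def enforce_file(local: Path, desired: str, backup_dir: Path) -> bool:
    """Make `local` match `desired`; return True if the file was overwritten."""
    current = local.read_text() if local.exists() else None
    if current == desired:
        return False  # already in sync, nothing to do
    if current is not None:
        # Keep the previous content around before replacing it
        # (a stand-in for whatever backup the real client keeps).
        backup_dir.mkdir(parents=True, exist_ok=True)
        shutil.copy2(local, backup_dir / local.name)
    local.write_text(desired)
    return True


if __name__ == "__main__":
    scratch = Path(tempfile.mkdtemp())
    conf = scratch / "httpd.conf"
    conf.write_text("# hand-edited on www\n")
    # The next run notices the drift and puts the managed version back.
    print(enforce_file(conf, "# managed version\n", scratch / "backup"))
```

The point of the model is simply that the client has no notion of "someone is working on this file right now"; any difference from the server's copy is treated as drift.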

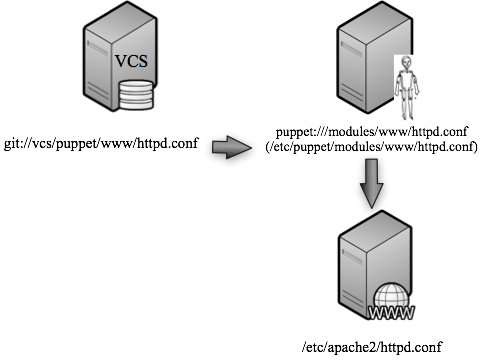

It is a best practice for a number of reasons for users to never directly edit the file that the Puppet server sees on disk. Instead, we’ll want the Puppet server to work from a copy of the file that is stored in some version control system. Indeed, even Introduction to Puppet on the official website shows this picture:

with nary a comment about the SVN box, probably because the value of using a VCS is well accepted. I’ll add a similar version control host to my example. The version control system could actually reside on the same host as any of the other components we’ve described, but I’m going to break it out for simplicity’s (and best practices’) sake. Let’s call it vcs and add it to the diagram we’ve been building:

As far as I can tell, there’s nothing new or remotely special about the setup I’ve described. Now let’s start to build the puzzle.

Question 1: Where Should You Edit the Web Server’s Config File?

Ok, it’s showtime! Let’s change the web server’s running configuration. For yuks, let’s assume this will be a substantial multi-line change and it is one I’m doing by hand[4]. Changes like this are often iterative, meaning I make a change, test it, fix any problems, test, fix more, and so on.

So where do I do this “iterative development” of the config? Here are two choices:

- Right there on the web server, in situ where the config file lives.

First we stop Puppet (don’t want our changes overwritten by the regular update cycle![5]), then we edit. Once we are done editing, we then have to get the changed file into our version control repository and then from there into the Puppet repository[6]. At that point we can restart Puppet[7].

- In some working directory, someplace (doesn’t matter where), that is “checked out/cloned” for editing from the version control system.

Each time I want to test a change, I have to commit the changes back to the VCS and either wait for it to propagate back to the web server or somehow “push” the process to make it happen sooner than the usual interval. Once it gets to the server, either Puppet or I have to tell the server to reload its config. I can then test if this change was correct. If not, edit the local copy, commit it again. Rinse and repeat.

Let’s examine the pros and cons of each of these options.

The In Situ Option

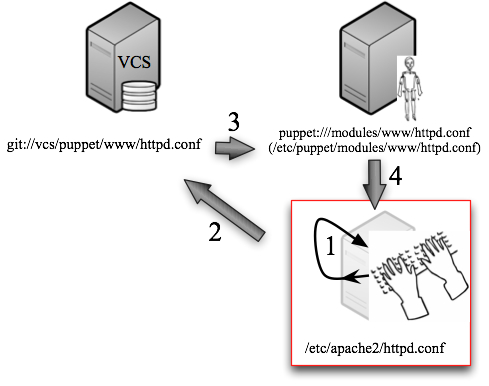

For the in situ option, the development process has a little less friction. Make a change, tell the web server to load the config. Test. Make another change, reload. It works. Check changes back into VCS so the correct version will be managed via Puppet when you’ve got a version of the config you like. Here’s a diagram of this option:

The places you get into trouble with this option are:

a) remembering to put the config back into Puppet

b) making sure Puppet doesn’t stomp on the work you are doing while you are doing it

c) you’ve introduced a change in the “control flow” path that the web server config file took to get to the machine. Because we edited it in place, that change didn’t get to the machine via Puppet. As a result it bypassed any of the processing Puppet might have done to select/produce that file (e.g. templating).

Each of these trouble areas has its own peril:

- Humans are fallible, so for a) you run the risk of version skew if you forget to check your changes back in[8].

- Having to stop Puppet on a machine has the obvious side effects if it is not restarted. But what about the case where your colleague has changed another file in Puppet which isn’t getting to that machine because you disabled Puppet there?

- Having a config file get to a machine in two different ways is bound to force you to eventually debug an issue with “the road less traveled.” I’ll lay money on the table that at some point you’ll find yourself puzzling over why a Puppet-sourced change to a file didn’t do something you expected.
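One way to take some of the sting out of trouble areas a) and b) is to wrap the editing session so that the agent is always turned back on. The sketch below is a rough illustration, assuming a Puppet version whose command line supports `puppet agent --disable <message>` and `puppet agent --enable`; the helper names `agent_paused` and `run_checked` are mine, and the command runner is injectable so the logic can be exercised without Puppet installed.

```python
import subprocess
from contextlib import contextmanager
from typing import Callable, Sequence

Runner = Callable[[Sequence[str]], None]


def run_checked(cmd: Sequence[str]) -> None:
    """Run a command and raise if it exits non-zero."""
    subprocess.run(list(cmd), check=True)


@contextmanager
def agent_paused(reason: str, run: Runner = run_checked):
    """Disable the local Puppet agent for the duration of a `with` block."""
    # Assumption: the installed Puppet accepts a message after --disable.
    run(["puppet", "agent", "--disable", reason])
    try:
        yield
    finally:
        # Runs even if the editing session raises, so the agent is not
        # left disabled by accident.
        run(["puppet", "agent", "--enable"])
```

Usage would look like `with agent_paused("editing httpd.conf"): ...`, with the edit/test loop inside the block. Note that this only helps with forgetting to re-enable the agent; it does nothing for a colleague's unrelated change that is held up while the agent is off.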

The Version Control System Option

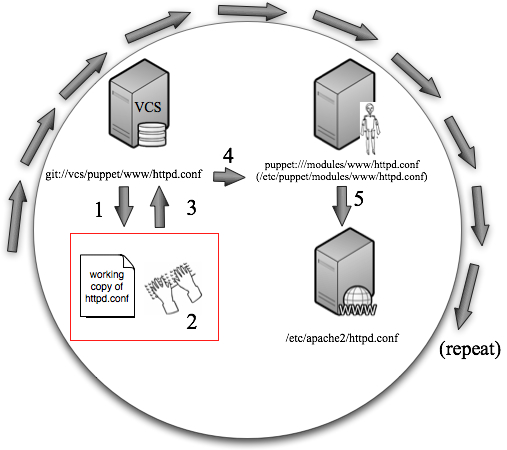

Now let’s look at the other option where we make all of our changes in some working copy and then push them to the VCS and then push them into Puppet so Puppet can get them on to the client. I intentionally used a run-on sentence to demonstrate the multi-step complexity of this option. I call this the “spin the wheel” option because you essentially have to spin the entire giant wheel to get each (even trivial) change to boomerang back to you and be put in place on the web server:

To go this route, you need to have a method to quickly:

1. get the VCS version of the file into the Puppet datastore (more on this in a moment) and

2. get the Puppet client’s config pull to take place out of its normal sequence (not hard for a single machine, but it does need to only happen after #1 has successfully completed)[9]
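The ordering constraint between those two steps is easy to state and easy to get wrong in a shell one-liner. Here is a small sketch of one "spin" as a sequence of steps that stops at the first failure. Everything here is hypothetical scaffolding: the step names and the `spin_the_wheel` function are illustrative, and the actual commit, sync, and client-trigger commands are left as callables you would supply.

```python
from typing import Callable, List, Tuple

# A step is a human-readable name plus a callable that returns True on success.
Step = Tuple[str, Callable[[], bool]]


def spin_the_wheel(steps: List[Step]) -> List[str]:
    """Run steps in order and stop at the first one that reports failure.

    Returns the names of the steps that completed successfully, so the
    caller can see how far around the wheel the change got.
    """
    done: List[str] = []
    for name, action in steps:
        if not action():
            break  # later steps must not run on top of a failed one
        done.append(name)
    return done
```

For the example in this post the list would be something like commit to the VCS, sync the VCS into the Puppet datastore, then trigger the client on www. If the sync fails, the client run is never triggered, which is exactly the "only after #1 has completed" requirement.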

One hidden drawback of this method which was pointed out to me by a Puppet Labs person is that your VCS changelog tends to get cluttered with trivial changes. If one of the goals of using a VCS in the first place is to have a change log where it is easy to determine what changes happen to your infrastructure over time, it gets annoying when the majority of log messages say “Typo…Typo…Damn…Fixed a spelling error…Almost got it…Nope, not yet…”[10]

Another interesting consequence of this method comes when we’re working to develop a configuration which is not as localized as the one used in our example so far. Imagine we’re developing a configuration for a service that runs on all of your machines. You have to make sure that the “in development” changes aren’t prematurely propagated out to all of the machines. There are a number of ways you can do this, for example:

- change the Puppet config so the modified file is targeted to be specific to the machine, then change it so it becomes applicable to a larger scope. This means you are changing not only the service’s config, but also the configuration management system’s config. Best keep your wits about you.

- a less “one-off” variation of the previous idea is to use Puppet environments to label the machine you are working on as “special.” You then develop your configs first in a “special” place before moving them over to the standard location. You can also create a similar scheme using either Facter or an external node configuration. All of these methods force you to engineer your Puppet infrastructure to take this workflow into account[11]. But you have to have thought of this beforehand.
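The first of these approaches boils down to "a host-specific copy wins over the general one." A toy Python model of that lookup is below. It is a sketch of the idea only: the `pick_source` function and the `httpd.conf.<hostname>` naming scheme are assumptions for illustration, loosely modeled on the way a Puppet file resource can be given several sources and use the first one that exists.

```python
import tempfile
from pathlib import Path
from typing import Optional


def pick_source(datastore: Path, filename: str, hostname: str) -> Optional[Path]:
    """Return the most specific copy of `filename` available for `hostname`."""
    candidates = [
        datastore / f"{filename}.{hostname}",  # in-development, one machine only
        datastore / filename,                  # the version everyone else gets
    ]
    for candidate in candidates:
        if candidate.is_file():
            return candidate
    return None


if __name__ == "__main__":
    store = Path(tempfile.mkdtemp())
    (store / "httpd.conf").write_text("general\n")
    (store / "httpd.conf.www").write_text("in development\n")
    print(pick_source(store, "httpd.conf", "www").name)   # host-specific copy
    print(pick_source(store, "httpd.conf", "mail").name)  # general copy
```

"Promoting" the change then means renaming the host-specific file over the general one, which is the moment where you are editing the configuration management system's own config and need your wits about you.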

Question 2: How Do Configs Get from the VCS Repository to the Puppet Datastore?

We almost touched on this in the last section, but I want to shine a more direct light on this dark little corner as well. This is the question of how you control the flow of data that resides in your version control system to the place where the Puppet server sees the data and can serve it to its clients. I’ve heard of three different ways people are doing this:

- A job gets run periodically on the Puppet server to “check out” any changes to the VCS repository. The Puppet server either looks into this working copy for its files or a subsequent part of the job copies[12] the whole or just changed data into the Puppet server’s datastore.

- The VCS has a post-commit hook added to the repository so that when a magical commit takes place (e.g. the change is tagged in a particular way or the commit happens to a specific branch) the VCS copies the appropriate files to the Puppet datastore.

- The Puppet server itself gets taught how to be a client of the VCS system and to look right into the VCS tree (e.g. through a web interface via HTTP) for files with specific branches or tags each time it runs. An alternative version of this is to have the Puppet server consult a config file (e.g. that has version numbers in it) which describes the latest version of a branch of the VCS repository. If the config file holds a version number later than the one in the Puppet repository, a check out is performed.
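As an illustration of the first approach, here is a rough sketch of the "copy just the changed data" half of such a periodic job. It assumes the working copy has already been refreshed (for example by an `svn update` run earlier in the same job), and it deliberately ignores deletions; the function name `sync_changed` and the directory layout are hypothetical.

```python
import filecmp
import shutil
import tempfile
from pathlib import Path
from typing import List


def sync_changed(working_copy: Path, datastore: Path) -> List[str]:
    """Copy new or changed files from the working copy into the datastore.

    Returns the relative paths that were copied. Files removed from the
    working copy are NOT removed from the datastore in this sketch.
    """
    copied: List[str] = []
    for src in sorted(working_copy.rglob("*")):
        if not src.is_file():
            continue
        rel = src.relative_to(working_copy)
        # Skip the VCS's own bookkeeping directories.
        if ".svn" in rel.parts or ".git" in rel.parts:
            continue
        dst = datastore / rel
        # Compare contents byte for byte, not just size and mtime.
        if dst.exists() and filecmp.cmp(src, dst, shallow=False):
            continue
        dst.parent.mkdir(parents=True, exist_ok=True)
        shutil.copy2(src, dst)
        copied.append(rel.as_posix())
    return copied


if __name__ == "__main__":
    wc, store = Path(tempfile.mkdtemp()), Path(tempfile.mkdtemp())
    (wc / "www").mkdir()
    (wc / "www" / "httpd.conf").write_text("Listen 80\n")
    print(sync_changed(wc, store))  # first run copies the file
    print(sync_changed(wc, store))  # second run finds nothing to do
```

Because the job runs on a timer, the worst-case delay for a change is the job interval plus the client's own interval, which is what makes this approach painful for iterative work.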

As you can imagine, things get trickier when we try to run the options from Question #1 into the options for Question #2 and we don’t quite get the candy bar we’d hoped. Clearly some of the options for how the data gets from the VCS into Puppet are more amenable to “I just made a change, do it now!” than others.
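The post-commit hook is the most "do it now!" of the three, so here is a sketch of the decision it has to make: which of the files in a commit should be published to the Puppet datastore. I am assuming Subversion and the line format printed by `svnlook changed` (a short status field followed by the path, with directories ending in a slash); the `branches/production/` prefix and the function name are made up for the example.

```python
from typing import List


def paths_to_publish(svnlook_changed: str, branch_prefix: str) -> List[str]:
    """Pick the files from a commit that live under the published branch.

    `svnlook_changed` is the text output of `svnlook changed` for one
    revision. Deleted paths and directory entries are skipped.
    """
    publish: List[str] = []
    for line in svnlook_changed.splitlines():
        if len(line) < 5:
            continue  # too short to hold a status field and a path
        status, path = line[:4].strip(), line[4:]
        if status.startswith("D"):
            continue  # deletions need separate handling
        if path.endswith("/"):
            continue  # directory entry, not a file
        if path.startswith(branch_prefix):
            publish.append(path)
    return publish
```

A real hook would receive the repository path and revision as arguments, run `svnlook changed` itself, and then export the selected files to wherever the Puppet server reads them. Commits to any other branch fall through untouched, which is one way to keep the "Typo…Typo…Damn" commits away from production.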

So, What’s the Right Thing To Do?

Here’s the scenario we’re describing: “You have a config file you need to iteratively change. This config file is kept in a version control system and managed by a configuration management system like Puppet. How do you do it?”

Some of the ideas we discussed above seem more or less “hackish” to me, but none of them really describe a workflow I’m particularly enamored of. I assert that the scenario I described above is one of the most common things you would want to do with Puppet. Given that, I really want to be enamored of the workflow because it is something I’m going to have to do all the time. How can we make this better?

I’ve been pondering this question for a number of months. Here are a few larval ideas:

- closer integration between Puppet and (some abstract layer on top of the more popular VCS systems). There’s a lot that could be done if a Puppet server could have easy read/write access to the VCS.

- create a way for a Puppet client to have a different relationship with the server than the current dom/sub paradigm where the client meekly says “Got anything for me?” and the server says “Yes, and you will take it, and use it to overwrite what you have.” This would be best if this different relationship could be temporary and just for a single file or directory. That way you may be able to say “take this web server config file and treat it specially. The client version will eventually be considered the canonical one. Scoop it up when I say I’m ready for it to return to the server.” This is one of the benefits of #1 above.

- another, perhaps simpler twist on the former item: providing a way to just “lock this file from Puppet-propagated changes” would help. It would certainly be nicer than “I’m taking Puppet down on this machine to avoid having my changes overwritten.”

I don’t think I’ve gotten close to licking this problem with these ideas, so I’d really like to discuss this with people who want to grapple with the same problem. Please feel free to comment on this post or get in touch with me via the comment form here on the site. Let’s talk about it.

The plot thickens… see the next post in this series.

1. and most other config mgmt tools, so even if you use another config mgmt system, I’d still love your input

2. for the purpose of this discussion, it does not matter whether or not this web server is considered production or dev, though if it is the former this question becomes a bit more interesting.

3. or the Puppet client is run every N minutes from cron

4. vs. some automated generation of that configuration

5. we could play beat the clock, but that seems treacherous. It causes people to have to pay attention to an unnecessary time limit. If they get up to pee, they risk having to revert their changes from the backup copy Puppet leaves behind.

6. as an aside, bcfg2 handles this situation really nicely. It knows how to specify that the changed copy on local disk should now be considered the true version and to suck that change back into the bcfg2 configuration with a minimal amount of effort.

7. you did remember to restart it, right?

8. yes, you can and probably should run some sort of Puppet dry-run report that would tell you if you blew it, but why set up a situation that is bound to fail due to human error?

9. however, it is a non-trivial undertaking if you need that push to happen on multiple machines. Yes, something like mcollective makes the multiple machine part easy. The hard part is matching the business logic Puppet will use to determine which machines should get that new config in something else outside of Puppet so the push happens only on the required machines.

10. yes, various VCS systems have ways to bundle/edit commit log messages, but then the burden shifts to the humans to keep their commit logs tidy or to go back and edit them. And we all know how that story ends.

11. perhaps not such a bad thing

12. cp, rsync, what have you
